FINANCE

The Cost Efficiency Era

AWS added a Cost efficiency score inside Cost Optimization Hub. On paper, that sounds like progress: a standardized metric, refreshed daily, tied to specific savings opportunities.

In practice, this score is useful in the same way a flashlight is useful. It helps you see what it points at. It does not help you see the whole room.

Cost efficiency, as AWS defines it, reflects how much optimization AWS can currently identify and quantify across a specific set of services and recommendation types. That visibility can absolutely be helpful. It can surface common inefficiencies, highlight missed commitment opportunities, and create a shared starting point for conversations.

At the same time, it only tells part of the story.

Efficiency in cloud environments is rarely a destination. It is an ongoing process of understanding how architecture, usage patterns, and business demand interact over time. This score is a step in that journey. It just is not the whole map.

What AWS is measuring with Cost efficiency

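The Cost efficiency score is based on a simple relationship: Potential Savings relative to Total Optimizable Spend. As AWS describes it, the score is a percentage, [1 - (Potential Savings / Total Optimizable Spend)] × 100, so a higher score means less identified savings remain on the table.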

Potential Savings comes from the recommendations AWS can generate through Cost Optimization Hub. These include actions like rightsizing, identifying idle resources, and suggesting commitment purchases such as Reserved Instances and Savings Plans.

Total Optimizable Spend represents the portion of your AWS spend that falls within the services and recommendation models AWS currently supports. It is calculated using net amortized costs, which smooth upfront commitment purchases over time and exclude credits and refunds.
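To make the relationship concrete, here is a minimal sketch in Python. It assumes the score is computed as 100 × (1 - potential savings / total optimizable spend), which is my reading of the AWS announcement. The function name and the dollar figures are made up for illustration and are not part of any AWS API.

```python
def cost_efficiency_score(potential_savings: float, optimizable_spend: float) -> float:
    """Return a 0-100 score: the share of optimizable spend with no identified savings.

    Assumed formula: (1 - potential_savings / optimizable_spend) * 100.
    """
    if optimizable_spend <= 0:
        raise ValueError("optimizable_spend must be positive")
    ratio = potential_savings / optimizable_spend
    # Clamp so odd inputs cannot push the score outside the 0-100 range.
    ratio = min(max(ratio, 0.0), 1.0)
    return (1.0 - ratio) * 100.0


# Hypothetical month: $100,000 of in-scope spend, $25,000 of identified savings.
print(cost_efficiency_score(25_000, 100_000))  # 75.0
```

Note what the denominator leaves out: spend on anything outside the supported services never enters the calculation, which is why the score describes the recommendation scope rather than the whole bill.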

This framing is important. The score is not measuring “how efficient your environment is” in a broad sense. It is measuring how much detectable optimization remains within AWS’s current recommendation scope.

That distinction explains both why the metric is useful and why it can feel incomplete.

What the score cannot see

A Cost efficiency score can indicate that there is optimization work available within AWS’s model. It cannot fully describe how efficient an environment is end to end.

There are a few reasons for that.

Coverage has boundaries

If a cost driver sits outside the supported services list, outside supported recommendation types, or outside how AWS models savings, it simply does not register in the score. That does not mean the cost is small or unimportant. It just means it is out of view.

Architecture-level inefficiency often looks “normal”

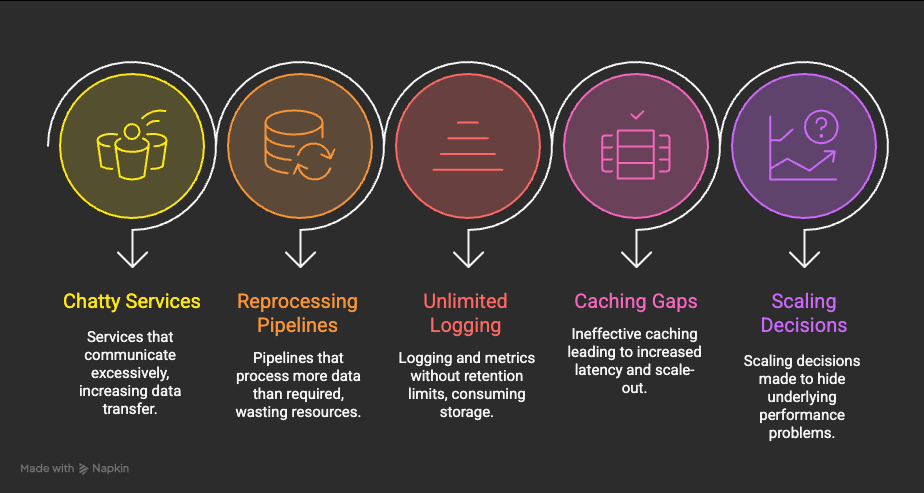

Some of the most persistent inefficiencies show up as healthy resources doing expensive things:

None of these naturally collapse into a single “stop” or “resize” recommendation. An environment can score well while still producing a high cost per unit of business value.
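A small illustration of that last point, as a sketch only. The two workloads, their numbers, and the "requests served" unit are all hypothetical, and the example assumes every dollar of spend is in scope for the score, which will rarely be true in practice.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    monthly_spend: float      # USD, hypothetical, treated as fully "optimizable"
    potential_savings: float  # USD flagged by recommendations, hypothetical
    requests_served: int      # stand-in for business output, hypothetical

    def score(self) -> float:
        # Assumed score formula: (1 - savings / spend) * 100
        return (1 - self.potential_savings / self.monthly_spend) * 100

    def cost_per_1k_requests(self) -> float:
        return self.monthly_spend / (self.requests_served / 1000)


chatty = Workload("chatty-microservices", 50_000, 3_125, 10_000_000)
lean = Workload("lean-but-oversized", 50_000, 12_500, 50_000_000)

for w in (chatty, lean):
    print(f"{w.name}: score {w.score():.2f}, ${w.cost_per_1k_requests():.2f} per 1k requests")
```

In this made-up case the first workload scores higher (93.75 versus 75.00) while costing five times as much per thousand requests. The score and the unit economics are answering different questions.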

Risk, stability, and data posture are not part of the score

The metric does not reflect reliability, recovery posture, or dependency complexity. It does not tell you whether a recommended action is safe in the context of your system. It estimates savings. It does not model consequences.

Cost efficiency and cost reduction are not the same thing

One thing this score does not attempt to answer is whether total spend will go down.

It is entirely possible for cost efficiency to improve while overall spend increases. Growth, new workloads, higher availability targets, security controls, and AI adoption all push spend upward even as environments become more efficient.

The score is best understood as a directional signal about visible optimization opportunities, not as a guarantee of lower bills. When those two ideas get conflated, expectations drift quickly.
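A quick worked example shows how the two can move in opposite directions. This is a sketch with invented quarterly figures, again assuming the score is 100 × (1 - potential savings / total optimizable spend).

```python
def score(potential_savings: float, optimizable_spend: float) -> float:
    # Assumed score formula; inputs are in USD.
    return (1 - potential_savings / optimizable_spend) * 100


# Hypothetical quarters: the business grows, and the team also cleans up.
q1 = {"optimizable_spend": 100_000, "potential_savings": 25_000}
q2 = {"optimizable_spend": 160_000, "potential_savings": 20_000}

q1_score = score(**q1)
q2_score = score(**q2)
spend_change = q2["optimizable_spend"] - q1["optimizable_spend"]

print(f"Q1 score: {q1_score:.1f}")         # Q1 score: 75.0
print(f"Q2 score: {q2_score:.1f}")         # Q2 score: 87.5
print(f"Spend change: {spend_change:+,}")  # Spend change: +60,000
```

The score rose by 12.5 points while in-scope spend rose by $60,000. Anyone who was promised a smaller bill on the strength of the score would be surprised by that quarter.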

The strategy layer: helpful signals, incomplete context

Cost Optimization Hub recommendations are grouped into familiar strategy categories: commitments, stop or delete actions, scaling and rightsizing, upgrades, and Graviton migrations.

These strategies exist for good reasons. They represent well understood levers in cloud cost management. At the same time, the strategies are intentionally generalized. They are designed to apply across thousands of environments with very different architectures and risk tolerances. That generalization creates gaps.

Stopping or deleting resources, for example, does not automatically eliminate all associated costs. Storage, snapshots, backups, networking components, logging, and data transfer can continue independently of the original resource. In other cases, removal actions can intersect with data retention, recovery assumptions, or downstream dependencies in ways the recommendation engine cannot fully see.

Similarly, commitment recommendations can optimize unit rates while narrowing flexibility. Rightsizing can lower baseline costs while introducing performance sensitivity. Upgrade and migration paths can unlock efficiency while requiring meaningful operational change.

None of this makes the recommendations wrong. It just means they are signals, not conclusions.

Closing thought

I am glad AWS introduced the Cost efficiency score. It lowers the barrier to starting efficiency conversations and makes common opportunities easier to see.

I just see it as an early marker, not a finish line.

Cloud efficiency is still something teams uncover gradually, by combining tooling signals with architectural understanding and business context. The score helps illuminate part of that path. The rest comes from continuing to ask how systems behave, how value is created, and where cost and outcomes drift apart over time.

That work is ongoing, and that is okay.

RESOURCES

The Burn-Down Bulletin: More Things to Know

AWS Cost Optimization Hub introduces Cost Efficiency metric to measure and track cloud cost efficiency

The clean “source of truth” announcement: what the score is, what it includes (rightsizing, idle, commitments), and the fact it refreshes daily. Handy for aligning stakeholders on the official definition.

AWS Cost Efficiency Metric: A Unified Approach to Cloud Cost Optimization (OpsLyft)

A solid third-party breakdown of how the metric works and how to use it without turning it into a vanity KPI. Good framing for engineering and finance translating the same score differently.

5 Reasons New AWS Cost Efficiency Metric Will Transform Your Cloud Spending in 2025 (TruCost.Cloud)

Walks through the formula and what’s bundled into “potential savings,” plus practical ideas like benchmarking accounts/teams and tracking changes over time. Take the vendor pitch with a grain of salt, but the explainer section is useful.

AWS Cost Optimization: 11 Tools and 11 Critical Best Practices (CloudQuery)

Great “zoom out” companion: it puts Cost Optimization Hub (and the efficiency score mindset) in context with the rest of the tooling stack, so you don’t confuse a better score with better architecture.

Strategies for AWS Cost Optimization (DigitalOcean)

A straightforward guide that explains cost efficiency as an ongoing operating model, not a one-time cleanup. Also calls out Cost Optimization Hub as the central place AWS wants you to start from.

Introducing: Video Shorts!

I will be including video shorts in most newsletters going forward, both so the community can get to know me better and to encourage a new way of thinking about the work we do. My goal is to take concepts from cloud architecture and apply them to the way we live, think, and interact with the world around us.

That’s all for this week. See you next Tuesday!