FINANCE

Why Efficiency Needs Continuous Evaluation

Cloud efficiency is often evaluated through static benchmarks: utilization targets, cost-per-resource ratios, and predefined thresholds meant to signal whether a workload is operating "well" or drifting into waste.

These benchmarks can be useful snapshots, but they rarely hold up over time.

Cloud environments change constantly: workloads evolve, architectures shift, business priorities move. What looks efficient today may be misaligned tomorrow, even if nothing is technically broken.

In modern cloud environments, efficiency is not a fixed state. It reflects how systems are used, scaled, and adapted in production. Managing it well requires ongoing evaluation, not periodic validation.

When Workloads Move Faster Than Benchmarks

Workloads rarely behave the same way for long. Seasonality is the most obvious example. In retail and other consumer-facing systems, demand spikes around predictable events. Those spikes are expected, but they still distort utilization benchmarks that were calibrated during quieter periods. A system can look inefficient on paper while operating exactly as designed.
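To make the seasonality point concrete, here is a minimal sketch that compares a single fixed utilization target with a baseline drawn from a comparable period. All figures, the 55% target, and the 15% tolerance are hypothetical, and the example assumes capacity is scaled up ahead of a peak, which lowers average utilization.

```python
from statistics import mean

# Hypothetical weekly average CPU utilization (percent) for a retail service.
peak_weeks = [38, 35, 41, 36]       # headroom provisioned ahead of a holiday spike
last_year_peak = [37, 36, 40, 39]   # same season one year earlier

STATIC_TARGET = 55  # assumed fixed floor, calibrated during a quiet period


def flag_static(samples, target=STATIC_TARGET):
    """Flag every sample that falls below one fixed target."""
    return [u < target for u in samples]


def flag_against_baseline(samples, baseline, tolerance=0.15):
    """Flag samples more than `tolerance` below the mean of a comparable period."""
    floor = mean(baseline) * (1 - tolerance)
    return [u < floor for u in samples]


static_flags = flag_static(peak_weeks)
seasonal_flags = flag_against_baseline(peak_weeks, last_year_peak)

print("static benchmark flags:  ", static_flags)
print("seasonal baseline flags: ", seasonal_flags)
```

With these made-up numbers, the fixed target flags every peak week as inefficient, while the seasonal baseline flags none. The workload is the same in both cases; only the reference point changed.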

Seasonality is only one version of a bigger pattern. Benchmark drift tends to show up in four common environments:

In event-driven environments, traffic shifts abruptly around launches, promotions, or external triggers. In product-led environments, new features or customer tier expansion materially change concurrency and latency sensitivity. Data services that move from batch to real-time workloads operate under entirely different demand curves. And sometimes resources simply drift as ownership changes and governance weakens.

Each of these reflects a change in underlying workload behavior. The system evolves, yet the benchmark does not.

How Architectural Change Distorts Efficiency Signals

Architecture changes reshape cost, even when the change is intentional

Teams refactor for good reasons: reliability, performance, delivery velocity. But every architectural shift changes how resources are consumed and how cost flows through the system.

Why static benchmarks break down:

They assume cost drivers stay stable over time

When a service splits, traffic patterns change

When a dependency is added, retry behavior and latency sensitivity shift

When a workload moves regions, baseline capacity, redundancy, and data transfer paths often change with it

Two common patterns where cost rises alongside improvement:

Multi-region deployments:

Performance and reliability improve

But duplicated capacity and higher baseline consumption become part of the design

Benchmarks built on single-region utilization no longer reflect how the system actually operates

Monolith to microservices:

Each service carries its own compute, storage, and network overhead

Inter-service communication introduces latency that didn't exist before

Teams add caching, message queues, and observability to manage the distributed system

Total resource consumption increases, not because efficiency dropped, but because the architecture distributes the same workload across more components, each with its own baseline cost
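The baseline-cost effect in the microservices pattern can be shown with simple arithmetic. This is a rough sketch under stated assumptions: the dollar figures, the component counts, and the split into a fixed per-component baseline plus a per-request variable cost are all hypothetical simplifications.

```python
def monthly_cost(components, baseline_per_component, cost_per_million, millions_of_requests):
    """Fixed baseline per component plus a variable cost that scales with traffic."""
    fixed = components * baseline_per_component
    variable = cost_per_million * millions_of_requests
    return fixed + variable


TRAFFIC = 500  # hypothetical: millions of requests per month, same in both cases

# One deployable unit with a larger baseline.
monolith = monthly_cost(components=1, baseline_per_component=400,
                        cost_per_million=2, millions_of_requests=TRAFFIC)

# Eight services, each with its own smaller baseline; per-request cost unchanged.
microservices = monthly_cost(components=8, baseline_per_component=150,
                             cost_per_million=2, millions_of_requests=TRAFFIC)

print(f"monolith:      ${monolith}")
print(f"microservices: ${microservices}")
print(f"difference from baselines alone: ${microservices - monolith}")
```

In this example the cost of serving each request is identical before and after the split. The entire increase comes from the number of components carrying a baseline, which is why a benchmark calibrated on the monolith would misread the new total as lost efficiency.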

Where the disconnect shows up: When spend rises after an architectural change, finance sees a variance that needs explaining and engineering sees a system that is working better. Neither is wrong. What is missing is the shared context that connects the decision to the cost outcome. Without it, both sides are reasoning correctly from incomplete information.

Shifting From Benchmarks to Continuous Cost Awareness

Sustained cloud efficiency requires a different operating mindset. Rather than relying on fixed thresholds, teams maintain continuous awareness of how systems behave as conditions change. Benchmarks still exist, but they function as reference points, not absolutes.

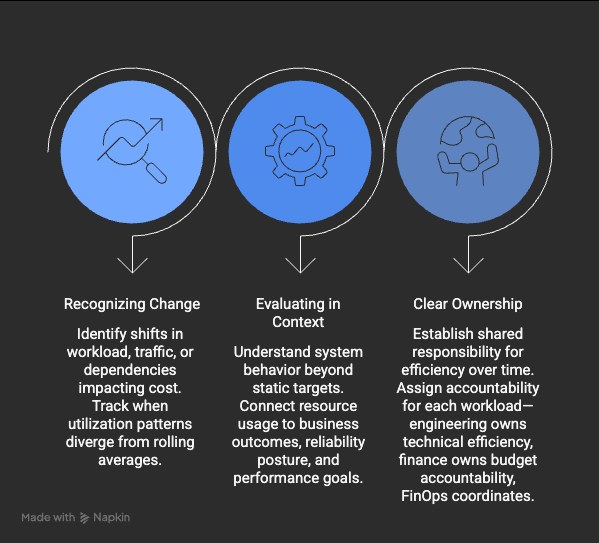

This approach centers on three practices:

Why This Matters to Finance and Procurement

For finance teams, static benchmarks often create false confidence.

A workload can meet utilization targets while drifting away from its intended cost profile. Without context, variance looks like a forecasting error instead of a system behavior shift. This creates friction when finance presents budget variance to leadership. The numbers show overspend, but engineering insists everything is running as designed. Both perspectives are accurate, but without a shared understanding of how the workload evolved, the conversation becomes defensive rather than productive.

Continuous efficiency awareness helps finance distinguish between growth-driven cost changes and true inefficiency. That distinction matters when building forecasts, explaining variance, and defending budget decisions. It also helps finance anticipate where costs will move next, rather than reacting to surprises after they hit the P&L.
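One way to separate growth-driven change from a shift in efficiency is to split a spend variance into a volume effect and a unit-cost effect. The sketch below assumes the team has agreed on a demand driver (requests, orders, active users) to use as the unit. The function name and all figures are hypothetical.

```python
def decompose_variance(prev_spend, prev_units, curr_spend, curr_units):
    """Split a period-over-period spend change into volume and unit-cost effects."""
    prev_unit_cost = prev_spend / prev_units
    total_change = curr_spend - prev_spend
    # Spend change expected if unit cost had stayed flat (growth-driven).
    volume_effect = (curr_units - prev_units) * prev_unit_cost
    # Remainder: equals the unit-cost change multiplied by current volume.
    unit_cost_effect = total_change - volume_effect
    return volume_effect, unit_cost_effect


# Hypothetical quarter: spend in dollars, units in thousands of requests.
volume_effect, unit_cost_effect = decompose_variance(
    prev_spend=10_000, prev_units=1_000,
    curr_spend=13_000, curr_units=1_200,
)
print(f"growth-driven: {volume_effect:.0f}, unit-cost-driven: {unit_cost_effect:.0f}")
```

Here, 2,000 of the 3,000 increase tracks demand and 1,000 reflects a higher cost per unit. The split does not say whether the unit-cost change is waste or the price of an intentional architectural decision. It only shows finance and engineering which part of the variance needs that conversation.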

Think about a finance team forecasting cloud spend based on historical utilization trends. If a major architectural shift happens mid-quarter (like migrating from VMs to containers, or introducing a caching layer), those historical trends become unreliable predictors. Without visibility into what changed and why, the forecast misses. With continuous awareness, finance sees the architectural shift coming, adjusts assumptions, and communicates the expected cost impact before it becomes a variance explanation.

For procurement, static benchmarks can mask long-term risk. Commitments, pricing models, and renewal assumptions are often anchored to historical efficiency patterns. When workloads evolve and benchmarks lag, procurement decisions rely on outdated views of how infrastructure will behave.

A procurement team negotiating a Reserved Instance (RI) or Savings Plan commitment typically looks at past utilization to project future coverage. If those utilization patterns shifted due to an architectural change, the commitment might under-cover actual usage or lock in capacity the team no longer needs. Either scenario creates financial inefficiency that shows up as wasted commitments or missed savings opportunities.
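The two failure modes described above can be sketched by comparing a fixed hourly commitment with usage before and after a workload change. This is a deliberately simplified model: it treats commitment and usage as the same hourly unit and ignores discount rates, instance families, and how a provider actually applies commitments. All numbers are hypothetical.

```python
def commitment_outcome(hourly_commitment, hourly_usage):
    """Return (unused_commitment, uncovered_usage) summed over the given hours."""
    unused = sum(max(hourly_commitment - u, 0) for u in hourly_usage)
    uncovered = sum(max(u - hourly_commitment, 0) for u in hourly_usage)
    return unused, uncovered


COMMITMENT = 100  # hypothetical committed amount per hour

historical_usage = [100, 110, 105, 120]   # the pattern the commitment was sized on
post_change_usage = [70, 80, 75, 90]      # after a hypothetical architectural shift

before = commitment_outcome(COMMITMENT, historical_usage)
after = commitment_outcome(COMMITMENT, post_change_usage)

print("before change (unused, uncovered):", before)
print("after change  (unused, uncovered):", after)
```

Against the historical pattern, the commitment is fully used and some demand spills over to on-demand pricing. Once usage drops, the same commitment leaves capacity paid for but unused. Running this kind of check against current workload behavior, not only past behavior, is the validation step the text describes.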

Understanding efficiency as something that evolves over time allows procurement teams to ask better questions before contracts are signed and renewals are locked in. Instead of assuming historical patterns will hold, they can validate whether current workload behavior supports the commitment structure. This reduces the risk of locking in inflexible capacity just as the system is about to change.

Static benchmarks create the illusion of control. Continuous awareness creates the conditions for it. That is where FinOps earns its place, by keeping all teams grounded in the same version of reality.

Efficiency Requires Constant Attention

Cloud environments are dynamic by design. When efficiency is judged against static benchmarks, teams are always reacting to the past.

The benchmarks that worked last quarter might not work this quarter. The assumptions behind last year's capacity plan may not survive a migration, a new feature, or a shift in customer behavior.

This does not mean obsessing over every dollar or chasing perfect utilization. It means staying aware, asking whether current efficiency metrics still reflect current reality, and updating benchmarks when the answer is no.

When that awareness is shared across finance, engineering, and procurement, efficiency stops being something teams debate after the fact and starts being something they maintain together. Not as a quarterly exercise or an annual audit, but as an ongoing practice that moves with the system.

Cloud environments do not pause between reviews. Efficiency thinking should not either.

RESOURCES

The Burn-Down Bulletin: More Things to Know

Cost-Aware Architecture: Treating Cloud Cost as an Engineering Constraint. This article examines why treating cost as a post-deployment concern creates surprises, and how modeling workload behavior before infrastructure is provisioned helps teams discuss trade-offs with real context. When cost becomes a property of the system rather than a line item, cloud bills stop being surprising and become understandable.

Top 8 Cloud Cost Optimization Strategies for Modern FinOps Teams. Cloud optimization should be treated as an ongoing process, not a one-time campaign. This guide explores how automation transforms FinOps from a reactive function into a continuous, self-improving system by integrating telemetry data with financial insights to dynamically adjust optimization tactics as workload behavior shifts.

Without a deliberate strategy, teams either overprovision and overspend or underprovision and lose performance. This piece walks through the trade-offs between cost and performance in cloud architecture, emphasizing the need for repeatable processes grounded in clear metrics that evolve as workloads and the cloud landscape shift.

While the cloud may have started as the most cost-effective place to run a workload, it doesn't always stay that way. This article examines how numerous factors impact cloud economics as businesses grow, and why the pay-as-you-go model becomes problematic when workload characteristics change from variable to constant baseline demand.

That’s all for this week. See you next Tuesday!